CZS-supported AI projects – session 2

13:20 – 14:15

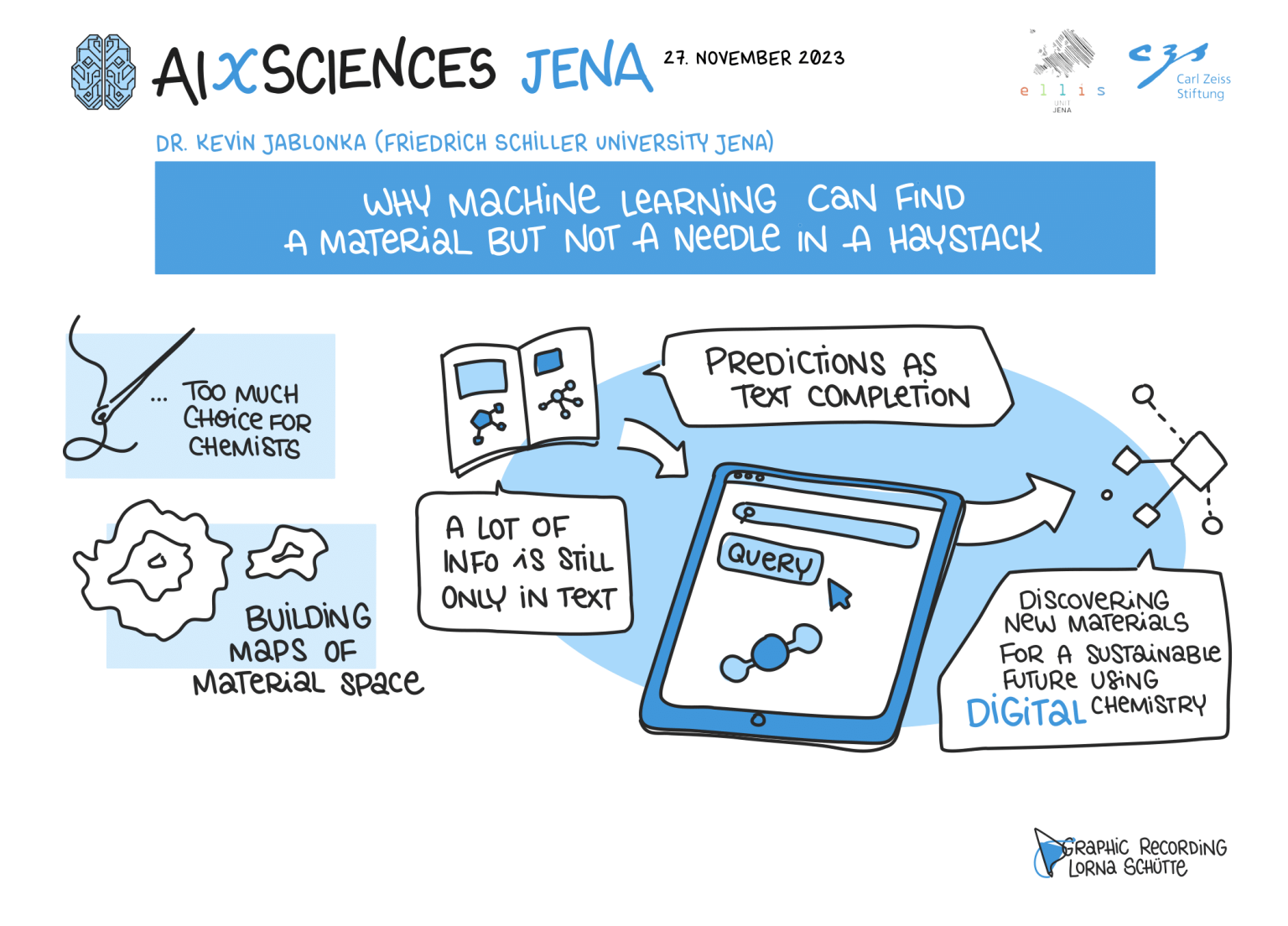

Why machine learning can find a material but not a needle in a haystack

The space of possible materials is unimaginably large. To find our way in this space, having a map that can guide us would be nice. In this presentation, we show that machine learning can provide us with such a map. We can use machine learning to encode patterns that are tacit or hidden in a large number of dimensions of this chemical space, and then use it to guide the design of materials. The simplest application of this navigation system is to predict properties that are hard to predict with conventional quantum chemistry or molecular simulation alone.

Once we have this in place, we can use it to gather information about structure-property-function relationships as efficiently as possible. A fundamental difficulty, however, is that we often have to deal with multiple, frequently competing objectives. For instance, increasing the reactivity often decreases the selectivity. Interestingly, one can show that, using a geometric construction, machine learning can effectively and without bias dramatically accelerate materials design and discovery even in such a multiobjective design space. It is important to realize, however, that machine learning relies on data that a machine can use. Toward this goal, we need to develop infrastructure that captures data without overhead while providing chemists with tools that simplify their daily work. A challenge is that data typically cannot be easily collected in a neat tabular form. Recent advances in applying large language models (LLMs) to chemistry indicate that they might be used to address this challenge. I will showcase how LLMs can autonomously use tools, leverage structured data as well as soft inductive biases, and, in this way, transform how we model chemistry.

Speaker and Project leader

Kevin Jablonka obtained his bachelor’s degree in chemistry at TU Munich. He joined EPFL for his master’s studies (and an extended study degree in applied machine learning), after which he joined Berend Smit’s group for a Ph.D. He now leads a research group at the Helmholtz Institute for Polymers in Energy Applications of the University of Jena and the Helmholtz Center Berlin. Kevin’s research interests are in the digitization of chemistry. For this, he has been contributing to the cheminfo electronic lab notebook ecosystem. He also developed a toolbox for digital reticular chemistry. Using tools from this toolbox, he addressed questions from the atom to the pilot-plant scale. Kevin is also interested in using large language models in chemistry and co-leads the ChemNLP project (with support from OpenBioML.org and Stability.AI).

Learning from experience and making predictions that will guide future actions are at the core of intelligence. These tasks need to embrace uncertainty to avoid the risk of drawing wrong conclusions or making bad decisions. The sources of uncertainty are manifold, ranging from measurement noise, missing information, and insufficient data to uncertainty about good parameter values or the adequacy of a model. Probability theory offers a framework to represent uncertainty in the form of probabilistic models. The rules of probability theory allow us to manipulate and integrate uncertain evidence in a consistent manner. Based on these rules, we can make inferences about the world in the context of a given model. In this sense, probability theory can be viewed as an extended logic.